Live from New York, It’s Ethical Tech!

We’re sharing the highlights from our recent in-person event in New York, where we gathered a panel of marketing and privacy experts to discuss our survey and how consumers feel about tech ethics.

Consumers’ demand for privacy and transparency will change how forward-thinking organizations do business. As our panel discussed, implementing ethical standards requires whole organizations to take ethics seriously.

We recently partnered with our friends at Ketch to host a panel discussion on the ethical use of AI. The panel featured:

Arielle Garcia, the Founder of ASG Solutions and previously Chief Privacy Officer at UM,

Raashee Gupta Erry, Founder and CEO of Uplevel and Board Member of the Ethical Tech Project, and

(moderator) Jonathan Joseph, Head of Solutions at Ketch

The room was packed and the conversation flowed from our national survey to how marketers are putting democracy at risk. We love connecting with our supporters across the country, and we’ll update you as we announce future events!

Since we couldn’t fit all our readers in the room, this week we’re sharing a quick recap of the highlights from the discussion. Enjoy!

Where Are Consumers Most Excited or Concerned About AI?

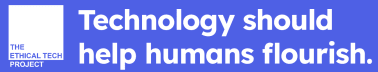

As we’ve discussed before, not all applications of AI are viewed the same way.

Raashee emphasized that the adoption of AI comes down to relative risk and reward. For example, the risk posed by a malfunctioning AI-powered translation service is far lower than that posed by a malfunctioning autonomous car or critical financial service. Consumers inherently understand this and want to see the appropriate safeguards in place. Adoption of AI will be staggered according to the degree of risk in automating a given role.

As Arielle then emphasized, at the end of the day, any automated service needs to work for consumers and not just help companies cut costs. “The customer service example is one of my favorite ones because, to some extent, yes, chatbots provide some convenience that people appreciate. But all of us, I'm sure, have pressed zero a million times when you just need to get to a human because there is no automated response that's going to help you.”

AI Requires a Broader View of Privacy

After discussing the impact of recently passed privacy laws, the panel described how our notion of privacy is too limited in the Age of AI. Our previous definition of privacy, born from concerns about abusive marketing practices in the early internet, focuses on how an individual's data is acquired, retained, and then used as an input for ad-targeting algorithms. We now have to worry not only about the inputs to models but also about their outputs. This includes how the data is used and applied, and whether those interactions build trust with consumers across the web or lead to greater confusion and manipulation.

As Arielle noted, “I would anticipate that [the impact of AI] is going to be the battleground … what the impacts are on people, on society, on amplifying and proliferating more misinformation, and deep fakes.”

Raashee added that privacy requires changes across not just data use but also product design, the structure of laws, and how those laws are applied. This includes promoting principles like privacy-by-design in products. It also requires the thoughtful application of existing rules and regulations, such as the FTC’s prohibition of unfair and deceptive practices, in a new environment. AI changes the types of data we consume and use, and our legal structures also need to change accordingly to protect consumers.

How Do Brands Build a Reputation as an Ethical User of Technology?

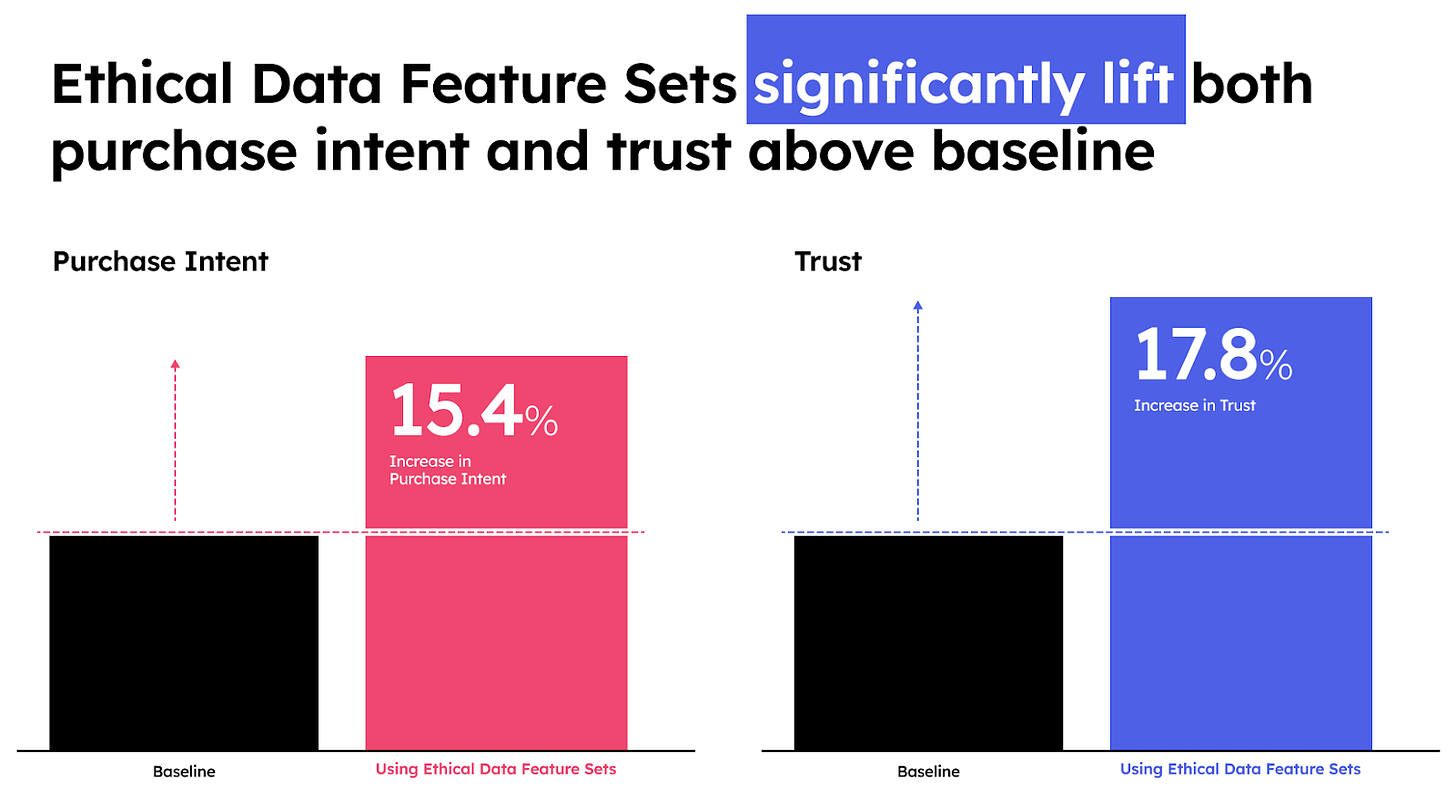

Jonathan cited our survey’s findings that consumer purchase intent increases by over 15% when there are clear ethical data practices, then asked the panel what companies can do to reap the rewards of consumer trust.

Arielle discussed the importance of having clear principles and making sure marketers understand the way data is used by partners: “I think the first thing is to actually get the right stakeholders together and develop a foundational set of principles that guide what you're comfortable using data for, what you're comfortable using AI for, et cetera. The biggest thing, I think, that marketers can do, however, is also consider what their partners are doing … So the practices of those you partner with impact your reputation, and so vendor diligence and understanding how not only your organization is using AI, but especially with this when so much of the tech is powered by the platforms and your agency partners and so on and so forth, it is equally critical to understand their approach.”

Raashee then explained how important it is to actually implement an organization’s beliefs across every function that handles data. This requires auditing the entire customer journey: how customers provide data and grant permission for its use, where that data sits and is accessed within an organization, how data is analyzed, and how users can request deletion of their data.

Bonus Q&A: How to Improve Democracy Through Data Usage?

In the Q&A section, a correspondent from the Press Gazette asked about the impact of privacy policy on democracy. Arielle emphasized that spending marketing dollars on the biggest tech platforms is undermining democracy and local journalism. She then offered three ways to change things:

Improved public policy,

Consumer advocacy, and

Putting pressure on the “buy side” of the ecosystem: the marketers within companies who spend ad dollars on big tech platforms to drive sales.

Tell us your thoughts! What are the most important steps we can take to make sure tech works to strengthen, not weaken, democracy?

What We’re Reading On Ethical (and Non-Ethical) Tech This Week:

VCs and Tech Companies at Odds Over AI Regulation - The Information

AI Companies Must Rethink How They Pay For Data, Lanier says - Bloomberg