Consumers Want AI Companies Held Accountable - Is Anyone Up To The Task?

People agree on the need for rules for AI companies but have mixed opinions about who they trust to enforce those rules

As the capabilities of AI continue to emerge, our polling shows Americans are united in their desire to hold companies accountable. But the question remains: if a company does something wrong, who is responsible for assessing the wrongdoing, and who will mete out the appropriate consequences?

A special holiday greeting to our readers. Today marks the last of our posts exploring how consumers feel about our 5 core principles based on data from our national poll. This week we are looking at accountability after looking at fairness last week.

Accountability: A business’s technology and its employees must do what they say they will do, system- and organization-wide. We envision a world where businesses are held accountable if they don’t do right by people.

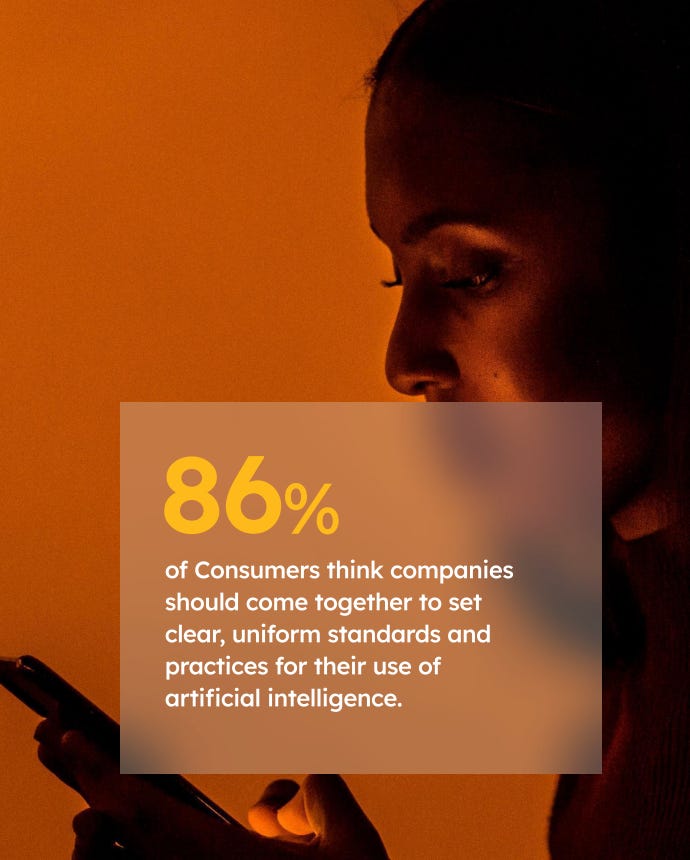

Consumers Want Clear Rules for AI Companies…

It is hard to understand how AI will overhaul society. With so much uncertainty, most consumers are demanding clear standards and rules for how companies use AI. That’s why 86% of our respondents agreed on the need for rules. Consumers have seen how the lack of rules governing social media has put children at risk, fostered the spread of misinformation, and allowed foreign adversaries to meddle in U.S. elections. They do not want to see the same thing happen with AI.

Most consumers are comfortable with the government playing a central role. When we presented the statement “Additional government regulation is required to ensure the responsible use of artificial intelligence,” 77% agreed. Our polling was completed before President Biden’s Executive Order, so we do not know respondents’ reaction to the specific policies coming from the White House, but they are clearly comfortable with more rules focused on protecting consumers from harm.

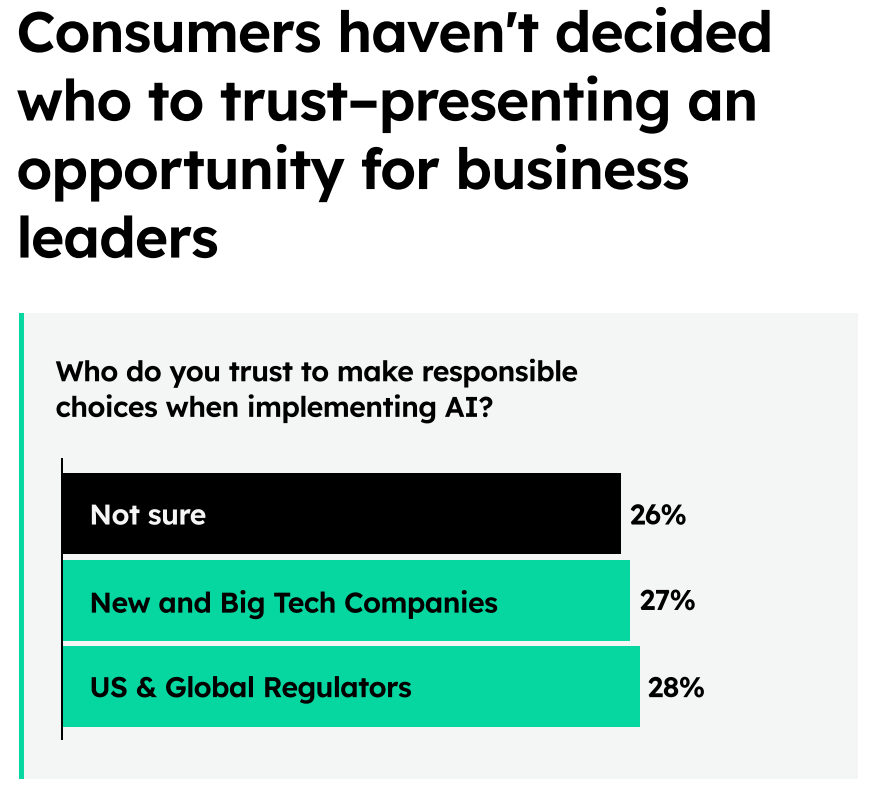

However, while people want protections, they do not agree on who will create them. We live in an era defined by an unprecedented lack of trust in government. Big tech companies have repeatedly violated consumer protection rules. Someone has to oversee rules for AI companies, but it is not clear who it should be.

… But They Are Not Sure Who to Trust

What do we make of this data? While regulators are the people’s top pick as the most trusted organization, the margins separating regulators, tech companies, and “not sure” are narrow enough to suggest people do not know who to trust.

As mentioned above, governments are starting to tackle AI issues. The question is whether these actions will do enough to win the trust of consumers. Both President Biden’s AI Executive Order and the EU’s AI Act attempt to provide guidelines for AI companies. Our poll showed not only that more than three-quarters of respondents support some form of government regulation, but also that more than half (52%) view data privacy laws favorably. Together, these stats suggest consumers want some level of public sector involvement. Thoughtful, well-crafted AI rules could be a way for elected leaders to build credibility by protecting consumer interests. We will be watching to see whether the Executive Order and AI Act actually translate into meaningful consumer trust and show that the public sector can hold AI companies accountable.

The arrival of AI’s novel, emerging capabilities has launched society into uncharted waters, and people want to see experts hold AI companies accountable. Our data has shown how consumers will reward ethical tech companies. We suspect consumers will also reward leaders who put people first and take the bold steps required to make sure AI technology develops in an ethical, responsible way.

Tell us your thoughts! Who do you trust to hold AI accountable? What rules or regulations would you most like to see put in place?

What We’re Reading On Ethical (and Non-Ethical) Tech This Week:

A policy framework to govern the use of generative AI in political ads - Brookings

Think tank tied to tech billionaires played key role in Biden’s AI order - Politico

L.L. Bean tips the scales in state privacy fight - Politico

Risky Analysis: Assessing and Improving AI Governance Tools - World Privacy Forum

Deloitte Is Looking to AI to Help Avoid Mass Layoffs in Future - Bloomberg

I find it interesting that there are five main ethical guidelines that tech companies are aware of and claim to follow, yet these same companies still use copyrighted material to train LLMs, even while walking a very thin ethical tightrope. I do not agree that material produced by writers, artists, and musicians, for example, should be used without the permission of the creator. The New York Times has blocked scraping of its content and is suing OpenAI for "billions" of dollars for using that content without the Times' permission. I feel that the government needs to step in and enforce strong guidelines so tech companies will not abuse their position with AI. We are quite aware of the chaotic mess the World Wide Web has become because of the lack of "guardrails" and rules: anyone can post anything on the web and convince a large number of people that it is factual or real. 2024 is a crucial year because it is an election year in this country. AI needs to be watched, and the companies need to be held accountable for misinformation and deepfake videos, news, or any outlet that produces information that people read and trust to be real. We have seen what a mess social media is and the damage it has done. People have found platforms to spew hate and misinformation, and the powers that be allow it and classify it as "freedom of speech". Freedom of speech is important, but it was meant for political speech, which our Founding Fathers wanted us to have without being punished, and along the way it has become hate speech. In closing, the government needs to step up, take control, and get AI regulated before someone uses it in a very unethical and dangerous way.