The Profit in Privacy: How Ethical Tech Wins Consumer Trust and Boosts Your Bottom Line

Respecting user data is more than the right thing to do; it’s also a key to maximizing profit.

Business models pitting privacy and profits against one another are outdated, and the future belongs to companies embracing privacy-by-design.

Privacy’s DuckDuckGo Problem

There’s a common problem when we tell people about the importance of ethical tech principles.

While people acknowledge that ethical behavior sounds nice, they consider it an impractical disadvantage for companies fighting for profitability. Too many people cynically believe that building ethical technology is a “nice to have” feature but a relatively low priority for anyone scaling a company.

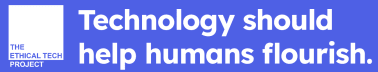

We call this the “DuckDuckGo Problem”: the commonly held belief that consumers like the sound of privacy but are not willing to prioritize privacy features or pay anything extra for them. DuckDuckGo is a privacy-centric search engine that promises never to sell user data. The unfortunate reality is that it commands only 2.5% of the search engine market, while Google has 90%. Generalizing from this one case, many assume that DuckDuckGo proves the rule that focusing on user privacy is a losing strategy.

Of course, there’s more to the story. Anyone following the latest antitrust lawsuits knows that Google is on trial after being sued by the government for abusing its market position to maintain a monopoly in search. Google admitted to paying over $10 billion a year to smartphone companies and wireless carriers to be their default search engine, so Google’s dominance over DuckDuckGo reflects more than consumers’ feelings about privacy.

Abusive monopoly behavior aside, the misperception remains that most people are ambivalent about privacy. Sure, we can all think of someone who treats privacy as a top feature. The catch is that these people tend to be obsessive: the ones with tape over their webcam who refuse to meet up for drinks unless you message them on Signal. As privacy enthusiasts, we salute these hardcore privacy people, but we realize they are not a representative sample of the U.S. population.

We want to see ethical tech practices implemented for everyone, not just the privacy die-hards. Most large consumer-facing technology is built to appeal to the average user, so until tech companies believe ethical data use is a top priority for that user, it will remain stuck in the hands of power users. And our data shows that people not only say they care about privacy but are also willing to reward, and pay, the companies that offer it as a feature.

We Know People Say They Care About Privacy. Do They Mean It?

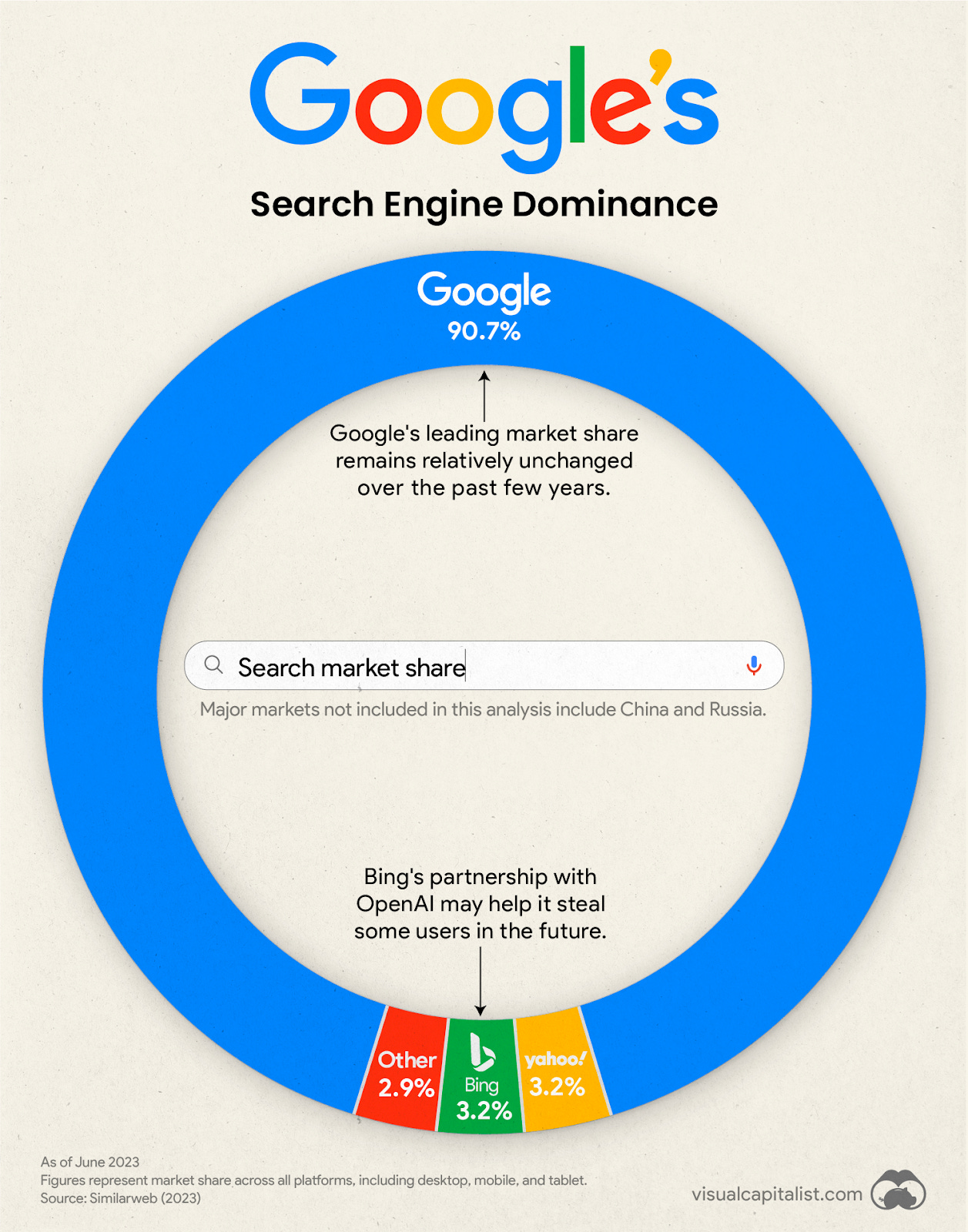

Earlier this year we ran an original national poll of U.S. consumers to learn how they are thinking about AI. The poll shows overwhelmingly that people say they care about privacy. Three-quarters of respondents agreed that they “wish they could wave a magic wand and go back to when companies didn’t know so much personal information about all of us.” Nine out of ten respondents said that companies should give consumers choice and control over how their data is used. Getting 90% of Americans to agree on anything these days is a minor miracle, and it makes a strong case that companies should treat privacy as a core product feature.

Most companies understand that privacy is important; the question is whether it is important enough to sacrifice other near-term goals. Most companies do not abuse data out of a sick desire to abuse their customers. They do it out of expedience, a lack of alternatives, or simple ignorance of how small choices made in different parts of the company snowball into a rampant disregard for data dignity.

For example, many companies aim to apply data minimization across their operations and delete customer data once they are done using it. However, they are also focused on training the most accurate models possible to optimize their services. Advancing product capabilities typically ranks higher among organizational goals than being ethical for its own sake, and employee incentives tend to follow the same priorities. As a result, organizations often hold on to data and never put effective data minimization processes in place, because they want to keep as much data as possible for training and improving their models in the future.

Humans are not that different from organizations. Human psychology is wired to satisfy our immediate urges even when they conflict with our longer-term goals. Who among us has not woken up on January 1st filled with visions of a year of health, only to get a burger and fries for lunch? Human behavior follows incentives, whether that’s the rush of a tasty, unhealthy meal or, in the case of companies, greater short-term profit and market share.

The only way ethical tech practices will become mainstream is if we align ethical tech with companies’ incentives.

That’s why we closely examined whether privacy features actually influence customers’ decision-making. For companies committed to building ethical technology, the results of our study were promising.

The Data: Consumers Will Reward Privacy Features

The Ethical Tech Project backed an extensive conjoint analysis to examine consumer preferences for privacy features. Conjoint analysis is a market research technique in which respondents are shown different combinations of product features to determine how they value each feature relative to the others. Instead of simply being asked whether they like a feature on its own, respondents are shown screens of two products with different combinations of features and are asked to choose between them. By evaluating the full set of choices in aggregate, we can work out which individual features consumers value most. For our study, we included a set of standard, baseline features (e.g., 10% off or free shipping) alongside features aligned with our five core principles (e.g., the ability to revoke data access, or the confidence that a product is overseen by a third-party privacy auditor). We also tested these features across three hypothetical products drawn from different sectors: a satellite television company, a B2C mattress brand, and a Bahamas resort.
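To make the method concrete, here is a minimal sketch of how paired choices can be turned into per-feature weights (often called part-worths). It assumes a simple pooled binary logit model, in which the chance of picking product A over product B depends only on the difference between their features, and it uses synthetic choices generated from made-up weights rather than any responses from our study. The feature names, the weights, and the helpers `simulate_tasks` and `fit_part_worths` are hypothetical; real conjoint studies usually involve more attributes and levels and often use richer estimation methods such as hierarchical Bayes.

```python
import itertools
import math

# Hypothetical binary attributes, loosely modeled on the features described above.
FEATURES = ["ten_pct_off", "free_shipping", "revoke_access", "privacy_audit"]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def simulate_tasks(true_weights):
    """Pair every two distinct product profiles and compute the probability that
    a synthetic respondent picks profile A, under a binary logit choice model.
    Returns a list of (feature_difference, probability_of_choosing_A)."""
    profiles = list(itertools.product([0, 1], repeat=len(true_weights)))
    tasks = []
    for a, b in itertools.combinations(profiles, 2):
        diff = [xa - xb for xa, xb in zip(a, b)]
        utility_gap = sum(w * d for w, d in zip(true_weights, diff))
        tasks.append((diff, sigmoid(utility_gap)))
    return tasks


def fit_part_worths(tasks, n_features, lr=1.0, iterations=2000):
    """Estimate part-worths by gradient ascent on the logit log-likelihood,
    where P(choose A) = sigmoid(w . (x_A - x_B))."""
    w = [0.0] * n_features
    for _ in range(iterations):
        grad = [0.0] * n_features
        for diff, share_a in tasks:
            p = sigmoid(sum(wi * di for wi, di in zip(w, diff)))
            # Push weights toward features that appear more often in chosen profiles.
            for i, d in enumerate(diff):
                grad[i] += (share_a - p) * d
        w = [wi + lr * g / len(tasks) for wi, g in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Made-up weights for illustration only; NOT results from the study.
    true_weights = [0.6, 0.3, 0.5, 0.2]
    estimates = fit_part_worths(simulate_tasks(true_weights), len(FEATURES))
    for name, value in sorted(zip(FEATURES, estimates), key=lambda t: -t[1]):
        print(f"{name}: {value:.2f}")
```

Because the synthetic choices come from known weights, the fit should recover them closely, which is a useful sanity check before running the same estimation on real responses. Comparing the estimated weights against one another is what lets a conjoint study say how much one feature is valued relative to another.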

The real value of this methodology is that it answers exactly what we want to know: how much do consumers prioritize privacy compared with other features? It turns out, quite a bit!

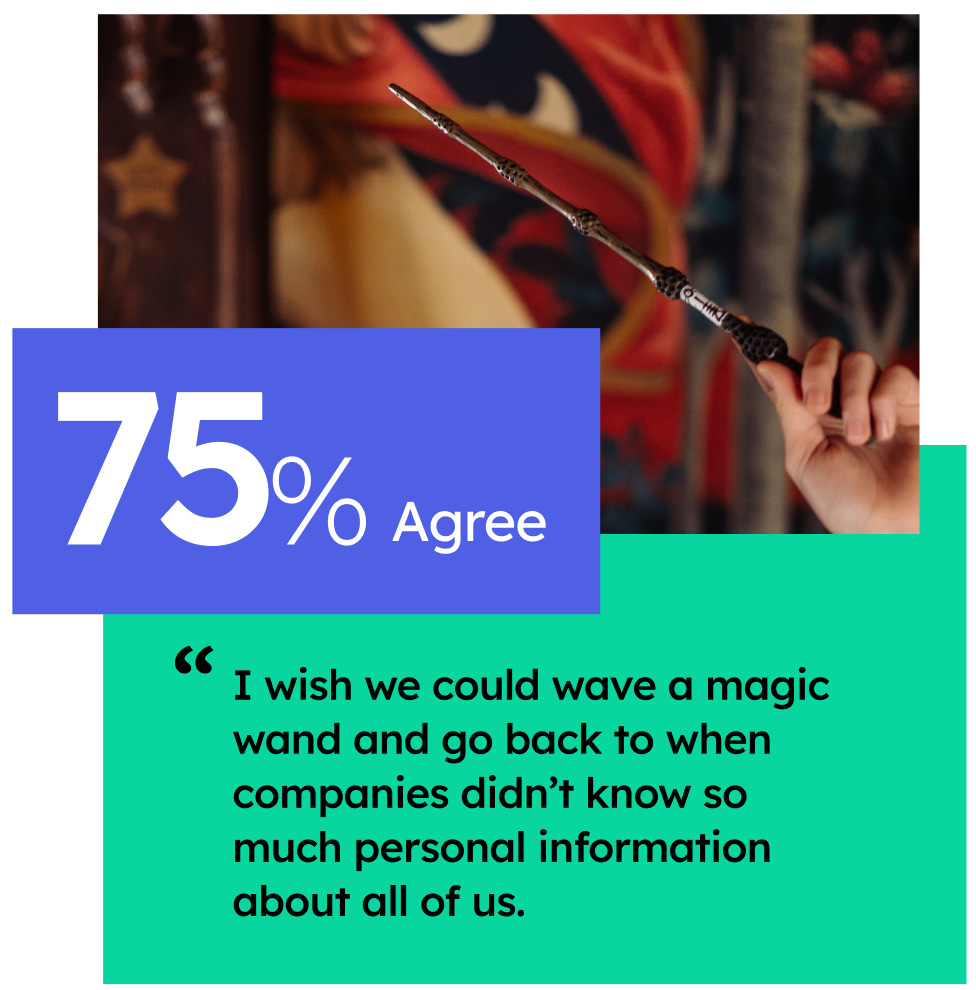

On average, ethical data feature sets increased purchase intent by 15% and trust in the brand by nearly 18% compared to the baseline. Treating customers and their data with respect is more than an ethical choice; it is a profitable one as well. As shown below, this trend was consistent across all types of product features, with agency and privacy being the most popular. These findings align with a similar study previously conducted by our partner Ketch, which found that responsible data practices led to a 23% increase in purchase intent.

People are tired of not having a say in how their data is used. Products that protect people’s data with strong privacy standards and give users a say in how data is kept will be the ones to benefit from this new era of consumer awareness.

Ethical Data Businesses Will Win the Future

Until recently, the playbook for consumer software companies looking to grow was clear. Create a product that captures people’s attention, collect valuable data on your growing user base, and then sell that data, build a targeted advertising machine, or use it to train your most valuable models to improve products and fuel further growth. Privacy policies constrained that growth, and who would ever want their business to grow more slowly?

Now things are changing. As shown in the data, privacy features are a key priority for consumers, and the companies that meet this change in consumer demand will be financially rewarded.

Don’t just take our word for it; look at Apple. The company has made privacy a central pillar of its marketing and brand identity. Beyond marketing privacy, it has rolled out a whole suite of features giving customers greater control over their data.

Meta (ironically) has started doing the same with WhatsApp, highlighting how end-to-end encryption assures users that their data is secure.

These big tech companies, some of which have disregarded personal data rights in the past, are smart enough to know what sells. They are not putting privacy at the center of their brands out of the goodness of their hearts. They want to be the brands people trust with their money as more and more people demand ethical tech products.

The world has changed, and the data is clear that customers are demanding ethical technology that empowers them to control their data. Features that provide privacy and agency are now an avenue of growth, not an inhibitor, and we are eager to see which companies take advantage of this trend to build better products and profit along the way.

Tell us your thoughts! How have your privacy expectations shifted over time? Which companies do you think do the best job highlighting privacy benefits to the general public?

What We’re Reading On Ethical (and Non-Ethical) Tech This Week:

Meta proposes charging monthly fee for ad-free Instagram and Facebook in Europe - CBS

Clearview AI reaches preliminary deal to settle biometric data privacy lawsuit - Biometricupdate.com

California’s Draft AI Privacy Rules Show Ambitious Approach - Bloomberg Law

Silicon Valley’s AI boom collides with a skeptical Sacramento - Politico