Technologists Can Overcome Biased Data – If They Try

We’ll Forever Imitate the Prejudices of the Past If We Fail to Mitigate Biases in Data

If we don’t build technology that overcomes the inequities of the past, we are doomed to an unfair future powered by biased AI.

After covering Transparency last week, this week we’re looking at the third of our five principles: Fairness.

Fairness: Businesses must measure and mitigate any disparate impact or bias that data systems, and the outputs of machine learning, intelligent systems, and artificial intelligence, may have in application.

Working in a profession governed by physics and logic, engineers often assume their creations are objective and free from bias. Yet the world is messy, and most datasets are even messier. As software continues to “eat the world” and play a growing role in important decision-making, businesses are obligated to ensure their systems are fair to all people.

Unfair technology carries real-life human consequences that can be dire. A landmark 2016 investigation by ProPublica detailed one horrifying example. COMPAS, risk-assessment software widely used by courts to predict the likelihood that a defendant would commit future crimes and to inform sentencing, misclassified black defendants as future criminals at nearly twice the rate of their white counterparts.
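The disparity ProPublica reported is a gap in false positive rates: among people who did not go on to reoffend, the share a tool labeled high-risk. The minimal sketch below shows how that rate is computed per group. The helper `false_positive_rate_by_group` and the toy records are hypothetical and invented for illustration; they are not ProPublica’s data or method.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Return {group: FPR}, where FPR = false positives / actual negatives.

    Each record is a hypothetical tuple:
    (group, predicted_high_risk, actually_reoffended).
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only people who did NOT reoffend count toward FPR
            negatives[group] += 1
            if predicted:  # labeled high-risk anyway: a false positive
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}

# Invented toy data: 10 people per group who did not reoffend.
records = (
    [("A", True, False)] * 4 + [("A", False, False)] * 6
    + [("B", True, False)] * 2 + [("B", False, False)] * 8
)
rates = false_positive_rate_by_group(records)
print(rates)                    # {'A': 0.4, 'B': 0.2}
print(rates["A"] / rates["B"])  # 2.0
```

Here group A is wrongly flagged at twice group B’s rate even though no one in either group reoffended, which is the kind of gap an overall accuracy number can hide.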

Similar examples abound. Amazon had to stop using hiring software that showed gender bias. Online ads that suggest arrest records and promote high-interest credit cards have been found to disproportionately target people with black-sounding names. IBM, Amazon, and Microsoft sold police departments facial recognition software that was less accurate for non-white individuals, potentially leading to mistaken arrests.

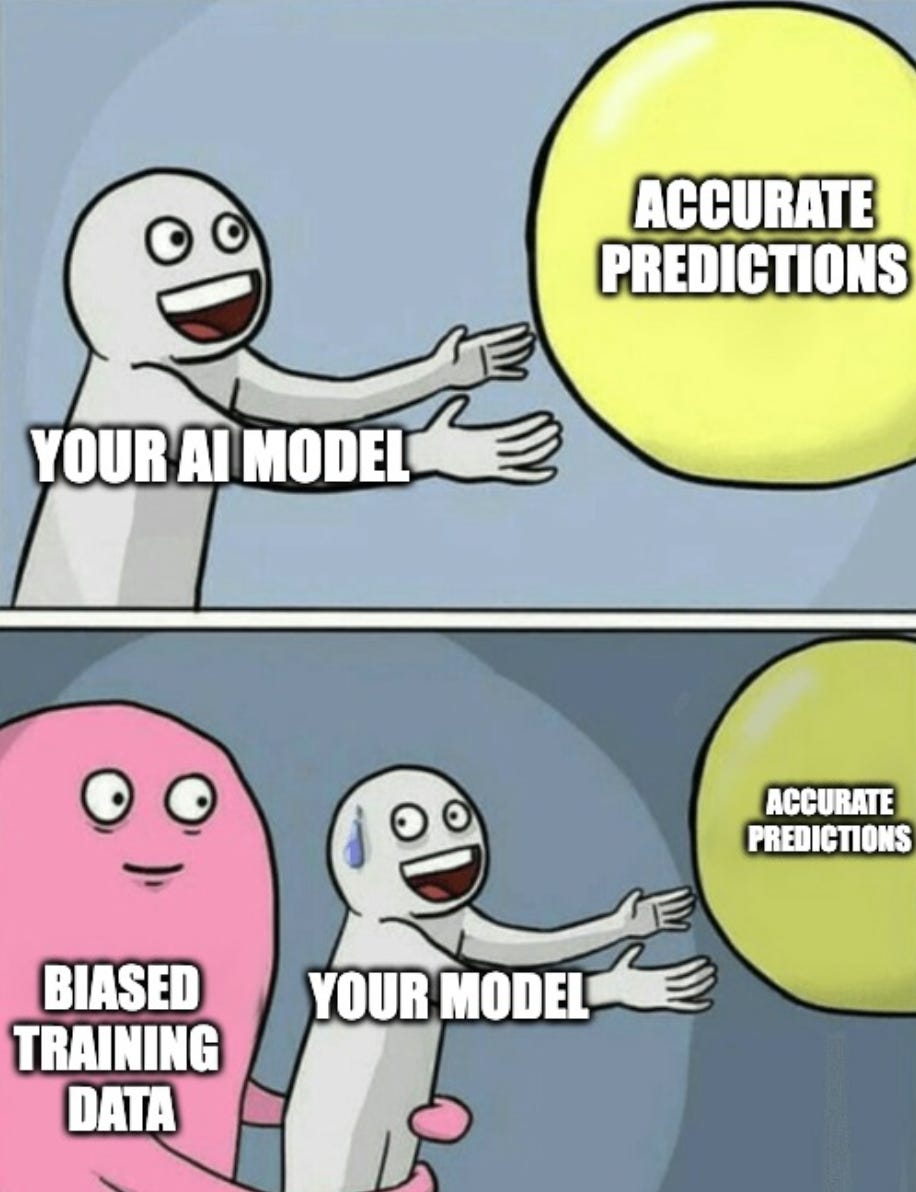

None of this bias was by design. Predictive algorithms estimate future outcomes from past data, and the problem is that past data reflects pre-existing biases. For example, one study found that an algorithm used by health systems systematically underestimated the health risks of black patients. As a result, black patients were less likely than similarly ill white patients to be referred for additional care. Incredibly, race was not an explicit variable in the underlying dataset. The culprit was a proxy: the algorithm used health care costs as a stand-in for health needs, and less money has historically been spent treating black patients’ illnesses.
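A small, invented simulation makes the proxy problem concrete. It assumes two groups with identical true health needs, where historically only 60% as much is spent per unit of need on group B (the 0.6 factor, the patient list, and the helper names are all hypothetical). Even a perfectly accurate cost predictor then under-selects group B when the top patients by predicted cost are chosen for extra care. This is a toy illustration, not the study’s model or data.

```python
# Toy illustration of proxy-label bias (invented numbers, not real data).
# Assumption: equal need in both groups, but less spending per unit of need on B.
SPEND_PER_NEED = {"A": 1.0, "B": 0.6}

patients = [
    {"id": f"{group}{i}", "group": group, "need": need}
    for group in ("A", "B")
    for i, need in enumerate([1, 3, 5, 7, 9])
]
for p in patients:
    # A model that predicts cost perfectly still inherits this spending gap.
    p["cost"] = p["need"] * SPEND_PER_NEED[p["group"]]

def top_k(patients, key, k):
    """Select the k patients with the highest value of `key`."""
    return sorted(patients, key=lambda p: p[key], reverse=True)[:k]

def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p["group"] == group for p in selected) / len(selected)

k = 4  # hypothetical number of slots in an extra-care program
by_need = top_k(patients, "need", k)
by_cost = top_k(patients, "cost", k)
print(share_of_group(by_need, "B"))  # 0.5
print(share_of_group(by_cost, "B"))  # 0.25
```

Ranking by true need gives group B half the slots; ranking by the cost proxy gives it a quarter, with no race or group variable used in the ranking itself.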

So long as it’s built on biased data, even the most thoughtful, well-designed algorithm will not be fair. We live in a biased world, and businesses must proactively pursue fairness to create products that treat all people equally. The ongoing AI revolution means more critical systems in our society will involve predictive models. We must promote fair technology to avoid a world filled with AI models that perpetuate past biases.

The big ethical question is: How do we ensure technology is fair when data invariably includes biases?

This topic has gained the attention of lawmakers, and several proposals focus on auditing automated systems for bias. New York City passed a law, coming into effect this year, that requires annual bias audits of automated hiring tools. Still, both advocacy groups and companies worry the law either doesn’t go far enough or is unenforceable. California lawmakers have proposed a bill that would require similar audits of automated decision tools that businesses use to make consequential decisions across many sectors.
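What does a bias audit actually compute? One common metric is the impact ratio: each group’s selection rate divided by the selection rate of the most-selected group. The sketch below assumes that definition; the group names and counts are invented, and the 0.8 cutoff echoes the EEOC’s “four-fifths” rule of thumb, used here as an assumed threshold rather than a legal test under either law.

```python
def selection_rates(outcomes):
    """outcomes: {group: (num_selected, num_applicants)} -> {group: rate}.

    Assumes every group has at least one applicant.
    """
    return {g: sel / total for g, (sel, total) in outcomes.items()}

def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}

# Invented numbers for illustration only.
outcomes = {"group_x": (50, 100), "group_y": (30, 100)}
ratios = impact_ratios(outcomes)

# Assumed threshold: the "four-fifths" rule of thumb, not a statutory cutoff.
flagged = [g for g, r in ratios.items() if r < 0.8]
print(ratios)   # {'group_x': 1.0, 'group_y': 0.6}
print(flagged)  # ['group_y']
```

A ratio like this only flags a disparity; deciding whether it reflects unfair treatment, and how to mitigate it, still requires looking at the data and the decision being made.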

Tell us your thoughts: Are these laws and tools the right approach to creating fair technology? What policies should businesses and governments use to eliminate biases, especially in AI systems?

What We’re Reading On Ethical Tech This Week:

Answering AI’s biggest questions requires an interdisciplinary approach

Tech execs warn lawmakers to keep AI 'under the control of people'